For me, Windows 11 is the best shell for Linux. I use it at home and at work, and I run my Linux dev station (Python, Scala, …) on WSL2. There is a trick to avoid the Git credential store problem in WSL2: configure Git inside WSL2 to use the Windows 11 credential helper.
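As a rough sketch of that trick, the snippet below builds the `git config` call that points Git inside WSL2 at the Git Credential Manager shipped with Git for Windows. The path is an assumption (the default Git for Windows install location as seen from WSL2), and `GCM_PATH`, `escape_spaces` and `credential_helper_command` are names made up for this example; adjust the path to wherever Git is installed on your machine.

```python
import shlex
from typing import List

# Assumption: Git for Windows is installed in its default location, so its
# Git Credential Manager is reachable from WSL2 under /mnt/c.
GCM_PATH = "/mnt/c/Program Files/Git/mingw64/bin/git-credential-manager.exe"


def escape_spaces(path: str) -> str:
    """Backslash-escape spaces, because Git runs an absolute helper path through a shell."""
    return path.replace(" ", "\\ ")


def credential_helper_command(gcm_path: str = GCM_PATH) -> List[str]:
    """Build the `git config` call that sets the Windows helper for Git in WSL2."""
    return ["git", "config", "--global", "credential.helper", escape_spaces(gcm_path)]


if __name__ == "__main__":
    # Only print the command so nothing is changed by accident;
    # paste it into a WSL2 shell to apply it.
    print(shlex.join(credential_helper_command()))
```

To apply the setting from Python instead of pasting the printed command, the returned list can be passed to `subprocess.run(..., check=True)` inside WSL2.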


When you work with Azure Machine Learning, you are not required to use the Azure Machine Learning portal. Because the official library lets you connect to your workspace from your own environment, you can use containers to build a complete dev container in which you develop your code and deploy it to the Azure Machine Learning service.


I had the pleasure and honor of speaking at the last Codemotion World Conference about #DeepLearning and #Azure.

Title: Applied Deep Learning state-of-the-art

Subject: In this session we will see how to apply transfer learning to a state-of-the-art pre-trained model like RoBERTa to reach our own goal in Named Entity Recognition with our own dataset. We will collect and label new data and feed it to the network to achieve transfer learning. Then we will take the new fine-tuned weights and use FastAPI to deploy our fine-tuned model to Kubernetes for inference.

Here is the video of my talk:


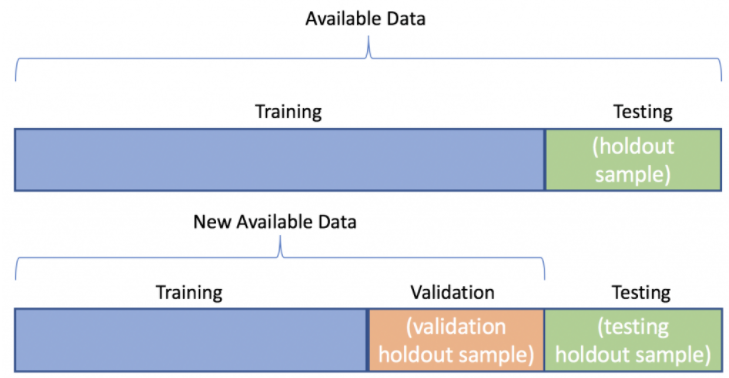

When you work with text data, you often want to split it into training and test sets. This is very common in machine learning, including deep learning for natural language processing. The problem is that the split has to be consistent across the training and test sets, while at the same time keeping your data consistent.

NOTE: img source


There was a nasty bug in Hugging Face's tokenizers that caused a random runtime error, depending on how you used the tokenizer when running your neural network in a multi-threaded environment. Since I'm using Kubernetes, I could work around it by letting the pod be scheduled again, so I didn't pay much attention to it: the bug was in the library itself, not in my code.

NOTE: Details here

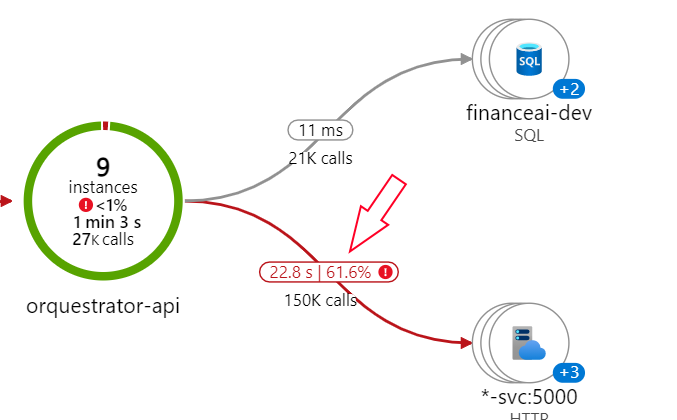

But then, while checking my AppInsights, I found something very bad :). The bug that I thought was occurring “sometimes” was happening all the time:

This is the error rate of my backend with transformers version 4.6.0:
